Highlights:

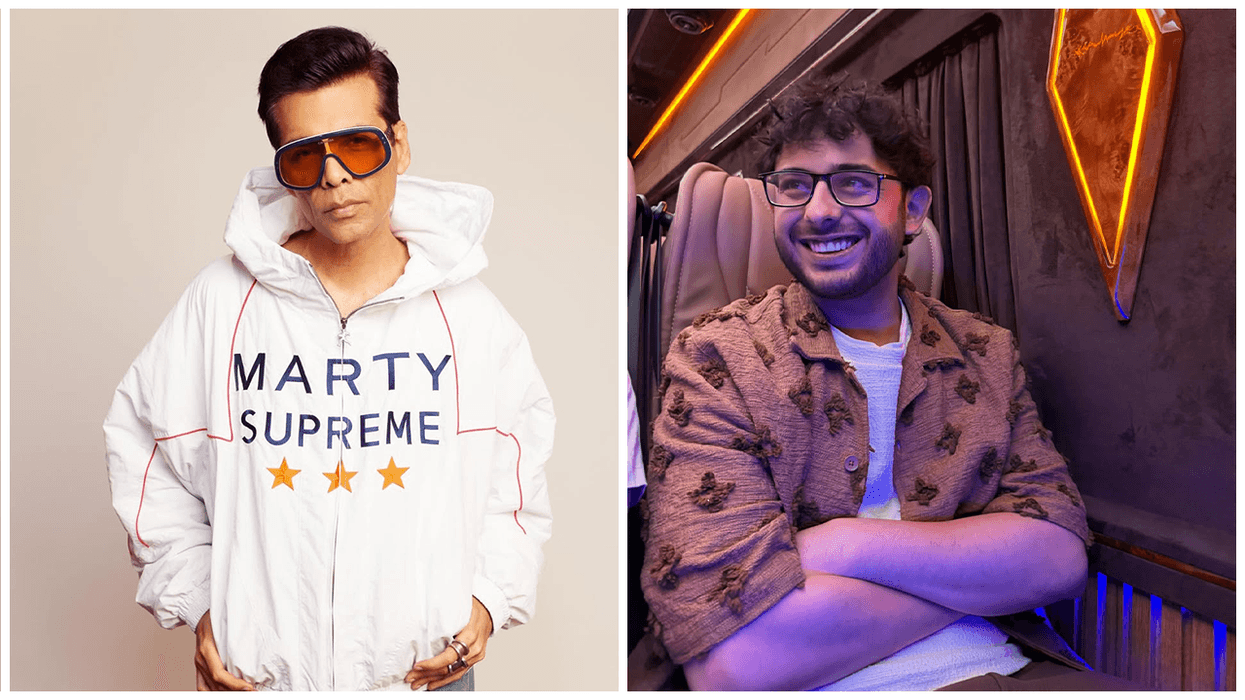

- YouTube launches a tool to detect manipulated videos featuring popular creators.

- The system scans uploads for altered faces or voices and alerts affected creators.

- The technology underscores the growing tension between digital privacy and automated content moderation.

- Experts warn the move reflects a broader trend: platforms are turning to the same kind of technology that created the problem in order to manage it.

A paradox of oversight

YouTube’s new likeness detection system is designed to spot videos where creators’ faces or voices have been manipulated without permission. Once identified, the platform alerts the affected creator, giving them the chance to take action.

While the system is framed as a safeguard, it also highlights a striking paradox: the very technology that enabled deepfakes and digital impersonation is now being used to police them. This illustrates a broader shift in the digital landscape, where automated tools are both the source of the problem and a critical part of the solution.

Privacy in focus

Deepfake technology has made it easier than ever to create realistic but unauthorized videos of celebrities, influencers, and public figures. YouTube’s detection tool addresses this misuse, but it also emphasizes how little control creators have over their digital likenesses.

Even with detection, manipulated content can spread widely before anyone intervenes, so a creator's image or voice may already be circulating online in ways they cannot fully control. The system offers protection, but it does not restore full control over a creator's digital identity.

Cleaning up the mess

YouTube is not alone. Companies like Meta and TikTok are also introducing measures to label or restrict synthetic content, reflecting a growing reliance on automation to manage risks created by earlier automation.

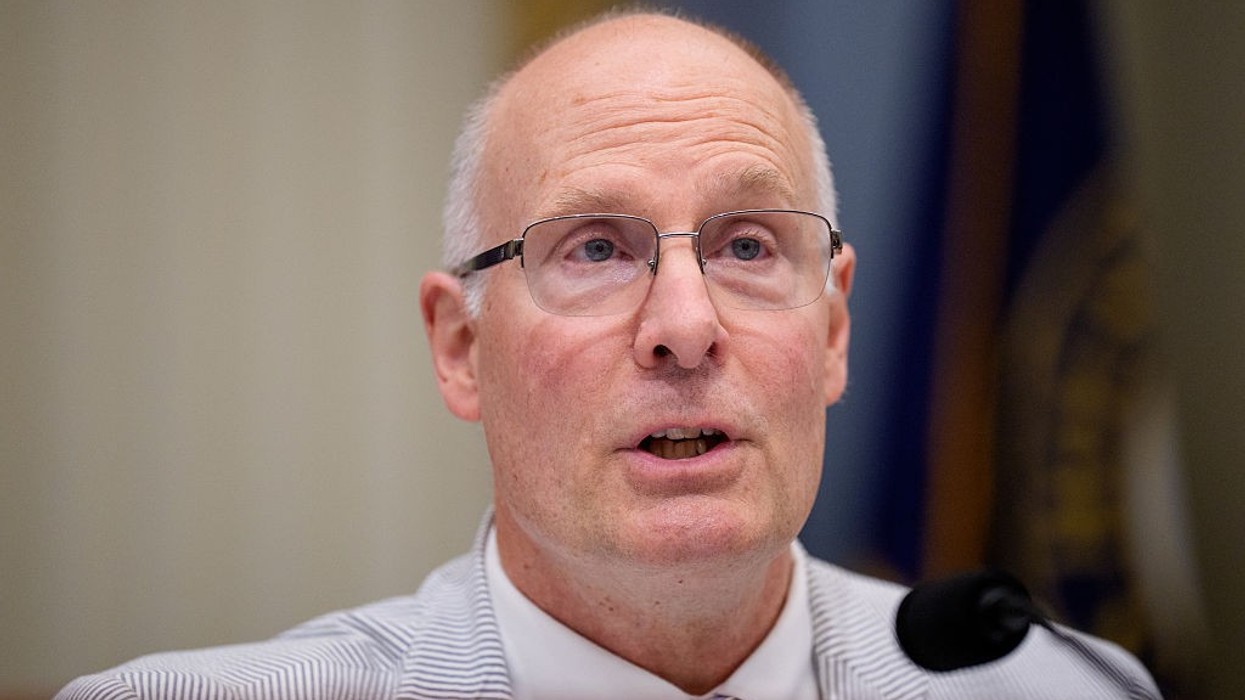

Experts describe this as a self-regulating cycle, in which platforms deploy the same kinds of tools that enabled content manipulation to monitor it and protect users. While these systems help reduce harm, they also concentrate control over digital identity in the hands of large tech companies.

Limits of protection

Detection tools are not foolproof. Parody and transformative content can be mistaken for impersonation, human error can lead to misidentification, and flagged videos still require human review. Scanning large amounts of personal data also raises concerns about privacy, consent, and transparency.

For creators, the tool offers reassurance but also underscores an uncomfortable reality: much of their digital presence may already exist beyond their control. In a world where machines monitor machines, the line between protection and surveillance is increasingly blurred.