Highlights

- OpenAI plans to allow adult users to access more human-like behaviors and adult content in ChatGPT.

- Critics warn this could harm mental health and foster unhealthy emotional attachments.

- The changes raise questions about AI’s role in human relationships and the company’s responsibility.

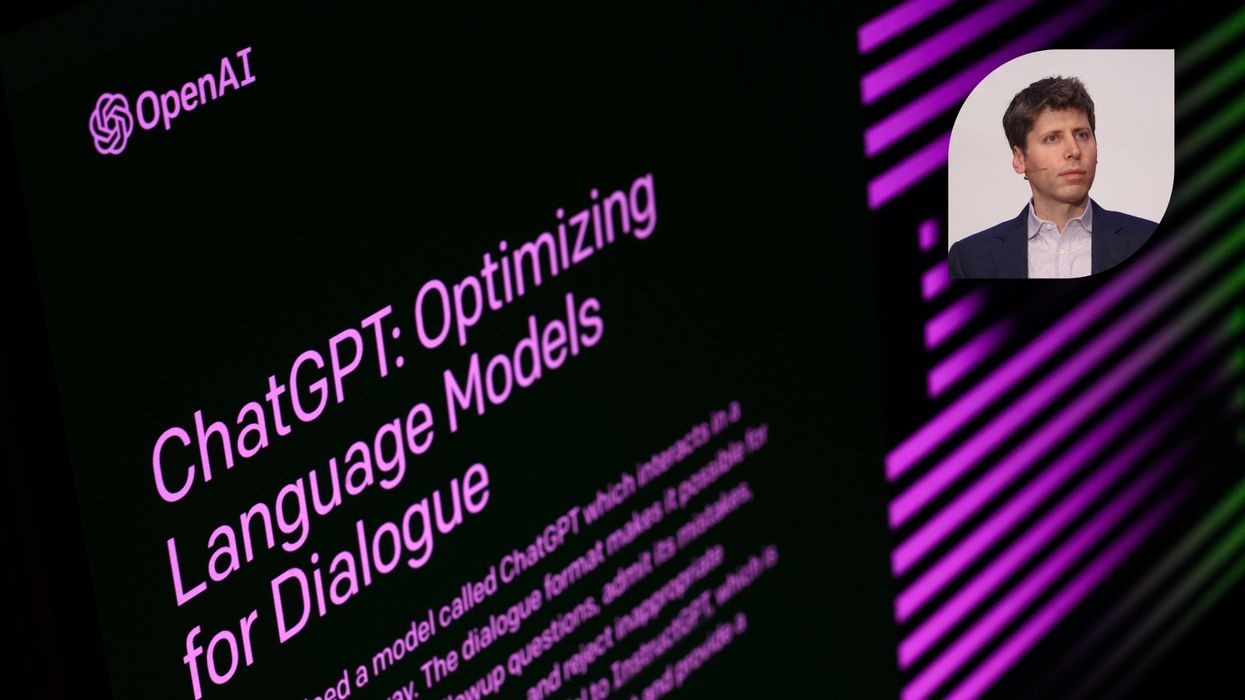

OpenAI expands ChatGPT for adult interactions

OpenAI has announced plans to update ChatGPT to behave more like a human companion. The new version, expected later this year, will allow verified adult users to access features such as playful conversation, emotional responses, and adult-oriented content, including erotica.

CEO Sam Altman explained that ChatGPT was originally made “pretty restrictive” to mitigate mental health risks. With new safeguards in place, he said, the company is now relaxing those restrictions to let adults experience a more human-like AI interaction.

Criticism over potential mental health risks

The plan has drawn criticism from advocacy groups, including the National Center on Sexual Exploitation (NCOSE), which warned that sexualized AI chatbots could cause real mental health harms.

Haley McNamara, NCOSE executive director, said these tools carry risks even for adults and urged OpenAI to reconsider rolling out adult content. “If OpenAI truly cares about user well-being, it should pause any plans to integrate this so-called ‘erotica’ into ChatGPT,” she said.

Adult choice and company responsibility

Altman emphasized that the changes are meant to treat adults like adults, while keeping safeguards in place. He added that the adult-oriented features are optional and designed to give users more freedom in how ChatGPT interacts with them.

The updates raise broader questions about emotional boundaries, mental health, and human relationships. Could AI companions replace or supplement real-life friendships or romantic experiences? And how much responsibility does OpenAI bear if users develop unhealthy attachments to an AI companion?

Implications for technology and human connection

As AI becomes increasingly human-like, these developments mark a new frontier in technology and intimacy. Experts say that while adult-oriented AI can offer companionship and creative engagement, it also raises questions about emotional dependency, ethical boundaries, and societal impact that have yet to be fully addressed.